The simulator was developed by Gábor Balázs during his teaching assistantship for the Experimental Mobile Robotics course of Csaba Szepesvári in 2011-2012. The goal of the course was to teach system identification, the basics of control and robot localization by particle filtering based on known terrain maps.

In the simulator, one can control a Segway-like robot on flat terrain. The design of the robot is based on the HiTechnic HTWay robot built from the LEGO Mindstorms NXT kit. The robot is equipped with a HiTechnic gyroscope sensor, two LEGO NXT motors and three Mindsensors DIST-Nx medium range infrared (IR) distance sensors. In the simulator, the gyroscope is modeled as a rate gyroscope. The motion dynamics of the robot are derived from the Euler-Lagrange equations using DC motor dynamics. These differential equations are solved approximately by a fourth-order Runge-Kutta method. The distance sensors are simulated by adding Gaussian noise to the true distance values. In reality, the accuracy of this IR sensor model is reasonable only in the 5-35 cm range, so we limit the sensor readings in the simulator to the same range to pose the same challenges for particle filtering.
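The two numerical ingredients mentioned above can be sketched as follows. This is a minimal illustration, not the simulator's code: `rk4_step` is the classical fourth-order Runge-Kutta step for a generic system `dx/dt = f(t, x)` (the robot's actual equations of motion are not reproduced here), and `simulate_ir_reading` adds Gaussian noise to a true distance. The noise level `sigma_cm` and the choice to clamp readings to the 5-35 cm range (rather than, say, discard them) are assumptions.

```python
import random


def rk4_step(f, t, x, dt):
    """One classical fourth-order Runge-Kutta step for dx/dt = f(t, x).

    `x` is a list of floats and `f` returns a list of the same length.
    """
    k1 = f(t, x)
    k2 = f(t + dt / 2, [xi + dt / 2 * ki for xi, ki in zip(x, k1)])
    k3 = f(t + dt / 2, [xi + dt / 2 * ki for xi, ki in zip(x, k2)])
    k4 = f(t + dt, [xi + dt * ki for xi, ki in zip(x, k3)])
    return [xi + dt / 6 * (a + 2 * b + 2 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]


def simulate_ir_reading(true_cm, sigma_cm=1.0, lo_cm=5.0, hi_cm=35.0, rng=random):
    """Return a Gaussian-noised distance, clamped to the trusted sensor range.

    sigma_cm and the clamping behaviour are assumptions made for this sketch.
    """
    noisy = true_cm + rng.gauss(0.0, sigma_cm)
    return min(max(noisy, lo_cm), hi_cm)


# Toy usage: integrate dx/dt = -x from x(0) = 1 up to t = 1.
state, t, dt = [1.0], 0.0, 0.1
for _ in range(10):
    state = rk4_step(lambda _t, x: [-x[0]], t, state, dt)
    t += dt
```

Limiting the simulated readings matters for localization: a wall further away than the upper limit produces the same reading wherever it is, so the filter gains little information from it.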

The controller is split into robot and PC parts, which communicate with each other by message passing. Such a communication scheme (via Bluetooth) is necessary for the real robot because the NXT brick cannot perform computationally expensive tasks such as particle filtering. The simulator therefore implements the same message passing scheme, which makes it easier to adapt controllers to the real robot later.
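A rough sketch of such a split is given below, with two in-process queues standing in for the Bluetooth link. The message types `SensorMsg` and `PoseMsg`, their fields, and the idea that the PC replies with a pose estimate are all hypothetical and only illustrate the pattern; the simulator's actual message format is not described here.

```python
import queue
from dataclasses import dataclass


@dataclass
class SensorMsg:
    """Robot -> PC message (hypothetical layout)."""
    t: float
    ir_cm: tuple


@dataclass
class PoseMsg:
    """PC -> robot message (hypothetical layout)."""
    t: float
    x: float
    y: float
    yaw: float


# Two one-way channels standing in for the Bluetooth link.
robot_to_pc = queue.Queue()
pc_to_robot = queue.Queue()


def robot_step(t, ir_cm):
    """Robot side: do only cheap work and ship the raw readings to the PC."""
    robot_to_pc.put(SensorMsg(t, tuple(ir_cm)))


def pc_step(estimate_pose):
    """PC side: drain pending messages and answer each with a pose estimate.

    `estimate_pose` is a placeholder for the expensive computation
    (for example, a particle filter update).
    """
    while not robot_to_pc.empty():
        msg = robot_to_pc.get()
        x, y, yaw = estimate_pose(msg.ir_cm)
        pc_to_robot.put(PoseMsg(msg.t, x, y, yaw))
```

Because both sides only touch the queues, the same controller logic could in principle be moved to a real link by swapping the transport, which is the motivation given above.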

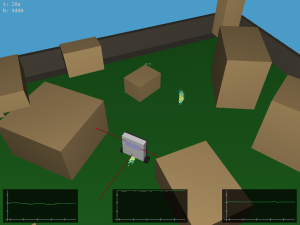

The robot part of the controller is an adaptation of the HiTechnic HTWay PID controller. It tracks the user input and drives the robot around on flat terrain among boxes (there is no collision detection). Meanwhile, the PC part can localize the robot based on the IR sensor measurements and knowledge of the map using a KLD-sampling adaptive particle filter. The simulator has a 3D visualization with OpenGL showing the position and orientation of the robot from a changeable camera view. When particle filtering is performed, the locations of the particles are also shown together with their likelihood (lighter is more likely). In this case, the IR sensor readings (green) and the corresponding values of the most likely particle (red) are plotted at the bottom of the screen. Furthermore, the top left corner shows the elapsed time in seconds and the (adaptive) number of particles (N).
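The adaptive particle count N comes from KLD-sampling, which chooses the number of particles so that, with probability 1 - delta, the Kullback-Leibler divergence between the sampled and the true posterior stays below epsilon. The sketch below computes the standard bound from the number k of histogram bins currently occupied by particles. The default values of `epsilon` and `delta` are assumptions, not the simulator's settings.

```python
import math
from statistics import NormalDist


def kld_particle_bound(k, epsilon=0.05, delta=0.01):
    """Number of particles required by KLD-sampling for k occupied bins.

    Uses the Wilson-Hilferty approximation of the chi-square quantile:
    n = (k-1)/(2*eps) * (1 - 2/(9(k-1)) + sqrt(2/(9(k-1))) * z)^3,
    where z is the upper (1 - delta) quantile of the standard normal.
    """
    if k < 2:
        return 1  # a single occupied bin needs no further samples
    z = NormalDist().inv_cdf(1.0 - delta)
    a = 2.0 / (9.0 * (k - 1))
    return math.ceil((k - 1) / (2.0 * epsilon) * (1.0 - a + math.sqrt(a) * z) ** 3)
```

When the particles are spread over many bins (high uncertainty), the bound is large; once the filter converges and the particles occupy only a few bins, it shrinks, which is why N changes over time.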

The following videos demonstrate particle filter based localization: video 1, video 2 and video 3. They were recorded using the real robot, but it looks the same in the simulator, except that there the robot's true position is shown (in reality this information is not available, so in the videos the most likely particle is shown instead). The "Searching..." phase at the beginning searches through all positions and orientations (yaw angle only; the pitch angle is assumed to be close to zero, i.e., the robot is close to vertical), and keeps the 10,000 most likely configurations (particles) to initialize the filter.
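The initialization described above can be sketched as an exhaustive grid search that scores every candidate configuration and keeps the best ones. The function name `init_particles`, the grid arguments, and the `likelihood` callback are hypothetical; in practice the likelihood would compare the measured IR readings with those expected from the map at each configuration.

```python
import heapq
import itertools


def init_particles(xs, ys, yaws, likelihood, keep=10_000):
    """Score every (x, y, yaw) grid configuration and keep the most likely ones.

    `likelihood` maps a configuration tuple to a score (higher is more likely).
    The result is sorted from most to least likely.
    """
    grid = itertools.product(xs, ys, yaws)
    return heapq.nlargest(keep, grid, key=likelihood)


# Toy usage with a made-up likelihood peaked at (1.0, 2.0, 0.0).
def toy_likelihood(cfg):
    x, y, yaw = cfg
    return -((x - 1.0) ** 2 + (y - 2.0) ** 2 + yaw ** 2)


top = init_particles([0.0, 1.0, 2.0], [0.0, 1.0, 2.0, 3.0], [0.0, 1.5],
                     toy_likelihood, keep=3)
```

Using `heapq.nlargest` avoids sorting the whole grid, which helps when the number of candidate configurations is much larger than the number of particles kept.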